Be more effective with your data – ElasticSearch

Posted Jun 18, 2014 | 6 min. (1159 words)

A relational database engine is great at set-based operations and has a very familiar and powerful querying syntax, but we are increasingly facing data challenges where leveraging a relational model doesn’t make as much sense. In building Raygun we have to deal with a constant stream of exception data (hundreds of millions of exceptions per month), which we structure into errors and occurrences with a simple 1:N relationship.

At first, using a relational model seemed to be a natural fit for this but over time we ran into three general problems:

- Querying over the occurrence data was performing poorly despite being strictly index based, e.g. finding all parent errors that have a child occurrence matching particular criteria.

- Producing aggregations of occurrence data was too costly to query in real time. Using a pre-aggregated bucket approach would add a massive overhead to our database size.

- Searching the data using full-text search (FTS) would require indexing most of the raw payload, which is costly in terms of both indexing time and database size. Our preference was to use Lucene.

Surely we must just need a more powerful database cluster? More RAM, more CPU? Sure, but what about when we add 10x our data volume?

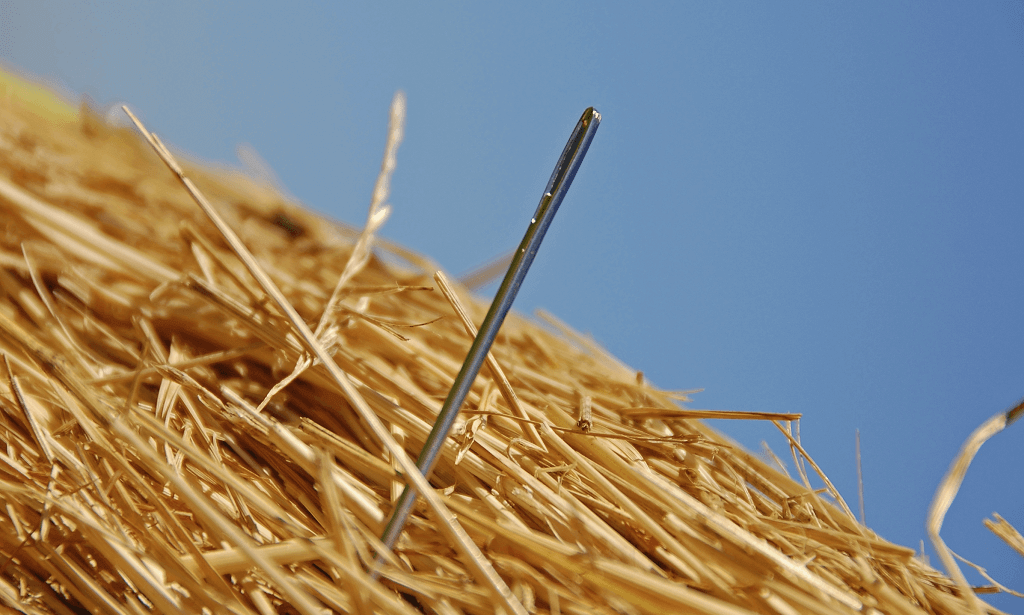

Rather than just blindly scaling, I always like to look around and see what everyone else is doing in case there is a better way. It turns out these are the types of problems that are handled nicely by ElasticSearch.

Given the product name you would naturally conclude that ElasticSearch is a search server. That sounds good if all you want is full text search via an HTTP endpoint, and this was how we initially deployed it with Raygun. Over time, however, we realised that it offers very powerful and efficient querying, which makes it ideal not just for search but also for quickly filtering sets and performing aggregations (e.g. for analytics) over very large sets of data. On top of that, it is incredibly simple to approach.

Filtering

To fetch a list of parents that have a child occurrence matching particular criteria, we want to first filter down to the occurrences matching the criteria and then group the results based on the parent error. In SQL this logically maps to a query with a WHERE clause and a GROUP BY.

This is nice and simple to achieve using ElasticSearch. ElasticSearch natively talks JSON and presents a very sane RESTful API for interaction. There are a number of native providers to deal with API interactions, so for my code example I am using C# and the NEST provider for .NET as it exposes a nice fluent interface which logically maps back to the underlying JSON you would provide in a raw request.

    elastic.Search(s => s
        .Type("occurrence")
        .Filter(f => f.OnField("SomeProperty").Equals("SomeValue"))
        .FacetTerm(f => f.OnField("errorId")));

This finds all occurrences where the property matches the value by using a Filter, and then buckets the results into groupings based on the error they belong to by using the Term Facet. The end result is a list of all of the parents matching the predicate, with a count of the matching occurrences under each error.
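Because the fluent calls map back to JSON, it can help to see roughly what a raw request for this looks like. The following is a minimal sketch that builds such a body using only `System.Text.Json`, with no NEST involved. The class name `FilterFacetRequest` and the facet name `errors` are made up for illustration, and the JSON shape is my assumption of the facet-era (1.x) request format, not output captured from NEST. It places the term filter inside a `filtered` query because, as far as I know, a top-level filter in 1.x is applied after facets are computed and so would not narrow the facet counts.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

public static class FilterFacetRequest
{
    // Builds a request body for: "occurrences where <field> == <value>,
    // counted per errorId". Dictionaries are used because the filtered
    // field name is only known at runtime.
    public static string Build(string field, string value)
    {
        var body = new Dictionary<string, object>
        {
            ["query"] = new Dictionary<string, object>
            {
                // Assumed 1.x "filtered" query: the filter narrows the set
                // that the facet below is computed over.
                ["filtered"] = new Dictionary<string, object>
                {
                    ["filter"] = new Dictionary<string, object>
                    {
                        ["term"] = new Dictionary<string, object> { [field] = value }
                    }
                }
            },
            ["facets"] = new Dictionary<string, object>
            {
                // "errors" is a hypothetical label for the facet in the response.
                ["errors"] = new Dictionary<string, object>
                {
                    ["terms"] = new Dictionary<string, object> { ["field"] = "errorId" }
                }
            }
        };

        return JsonSerializer.Serialize(body, new JsonSerializerOptions { WriteIndented = true });
    }

    public static void Main()
    {
        // Prints the JSON that would be POSTed to the occurrence type's _search endpoint.
        Console.WriteLine(Build("SomeProperty", "SomeValue"));
    }
}
```

Running it prints the body so you can paste it into a raw HTTP request and compare the response with what the fluent call returns.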

Aggregations

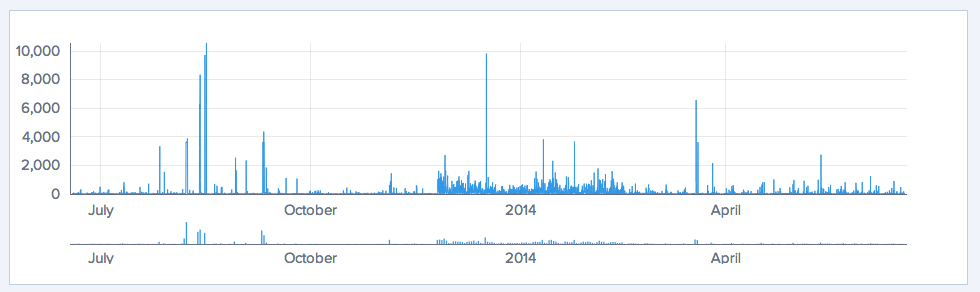

An example of an aggregation we use in Raygun is the error graph on the application dashboard. We provide numbers in hourly buckets. To extract that data using ElasticSearch we can use the DateHistogram facet.

    elastic.Search(s => s
        .Type("occurrence")
        .FacetDateHistogram(f => f.OnField("occurredOn")));

ElasticSearch 1.1 also offers a newer framework known as aggregations for specifying the same thing, and facets are due to be deprecated in a future version. If you are using C# and NEST you will need version 1.0 or later to pick up support for aggregations.
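For comparison, here is a rough sketch of what the hourly buckets might look like as a raw aggregations request. It only builds and prints the JSON with `System.Text.Json`. The class name `HourlyHistogramRequest` and the aggregation name `occurrences_per_hour` are hypothetical, and the body is my assumption of the 1.x `date_histogram` aggregation format rather than anything taken from our production code.

```csharp
using System;
using System.Text.Json;

public static class HourlyHistogramRequest
{
    // Builds a request body that buckets documents by a date field.
    // "interval" is passed through as-is, e.g. "hour" for hourly buckets.
    public static string Build(string dateField, string interval)
    {
        var body = new
        {
            size = 0, // only the buckets are wanted, not the matching documents
            aggs = new
            {
                // Hypothetical name used to find the buckets in the response.
                occurrences_per_hour = new
                {
                    date_histogram = new { field = dateField, interval = interval }
                }
            }
        };

        return JsonSerializer.Serialize(body, new JsonSerializerOptions { WriteIndented = true });
    }

    public static void Main()
    {
        Console.WriteLine(Build("occurredOn", "hour"));
    }
}
```

The interval is stated explicitly here because the dashboard graph described above is hourly.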

Search

When you hit a requirement like “search” for an application you face the unenviable task of having your application suddenly compete with Google or Bing in terms of speed and quality of results. Full text search providers in relational databases generally leave a lot to be desired. Using something more specialized such as Apache Lucene is the way to go, and that suits ElasticSearch perfectly: Lucene is what it uses, and full text search is what it performs fantastically at.

When specifying a search for Elastic we need to define a query. These ultimately map down to Lucene query operations so have a good read through the documentation to understand what options you have available.

    elastic.Search(s => s
        .Type("occurrence")
        .Query(q => q.QueryString(qs => qs.OnField(property).Query(value).Operator(Operator.and)))
        .Sort(f => f.OnField("occurredOn").Descending())
        .Sort(f => f.OnField("errorId").Descending())
        .From(start)
        .Size(count));

One important concept to keep in mind with how search operates in Elastic is that when you define your mapping you determine what is going to be indexed for search and how it is going to be broken down into tokens for later matching. By default you can throw data at ElasticSearch and it will infer a mapping for you – that's useful, but remember that some aspects of your data will be searched by a human, some will only be filtered on, and for others you just want to store a value. You can control all of this via your mappings.
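To make the paging and sorting concrete, the search above can be pictured as a raw request body along the following lines. This is a sketch under assumptions: it uses only `System.Text.Json`, the class name `OccurrenceSearchRequest` is invented for the example, and the body reflects my understanding of the `query_string` query format, not JSON captured from NEST.

```csharp
using System;
using System.Text.Json;

public static class OccurrenceSearchRequest
{
    // Builds a paged query_string search over a single field, newest first.
    public static string Build(string field, string text, int start, int count)
    {
        var body = new
        {
            @from = start,  // offset of the first hit to return (serialized as "from")
            size = count,   // number of hits per page
            query = new
            {
                query_string = new
                {
                    default_field = field,
                    query = text,
                    default_operator = "AND" // every term must match
                }
            },
            // Sort keys are applied in order: occurredOn first, then errorId.
            sort = new object[]
            {
                new { occurredOn = new { order = "desc" } },
                new { errorId = new { order = "desc" } }
            }
        };

        return JsonSerializer.Serialize(body, new JsonSerializerOptions { WriteIndented = true });
    }

    public static void Main()
    {
        // Second page of 20 results for a two-term search on a hypothetical "message" field.
        Console.WriteLine(Build("message", "null reference", 20, 20));
    }
}
```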

Here is an example of how we can control this again using C# and NEST:

    [ElasticType(Name = "occurrence")]
    public class OccurrenceMapping
    {
        // Storing the value and indexing for search
        [ElasticProperty(Store = true)]
        public DateTime OccurredOn { get; set; }

        // Indexing for search but not storing the raw value
        [ElasticProperty(Store = false)]
        public string Browser { get; set; }

        // Storing the raw value and indexing it as a single, unanalyzed
        // token (exact-match filtering rather than full text search)
        [ElasticProperty(Store = true, Index = FieldIndexOption.not_analyzed)]
        public string Provider { get; set; }
    }

For data you are indexing for search, you will want to investigate how it is being broken down, to better understand how search terms will match results. This process is known as analysis: inbound data is broken down into tokens through a tokenizing process. ElasticSearch offers a number of standard analyzers and tokenizers – e.g. to always lower case everything or strip common words – or you can take complete control of the process by supplying a custom implementation for one or both of those concerns.
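To get a feel for what analysis does, here is a deliberately tiny, self-contained illustration. It is a toy, not ElasticSearch's or Lucene's implementation: the class `ToyAnalyzer`, its separator list and its stop word list are all made up for this example. It splits text on whitespace and punctuation, lower cases each token and drops a few common words.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ToyAnalyzer
{
    // A hypothetical, very short stop word list for illustration only.
    private static readonly HashSet<string> StopWords =
        new HashSet<string> { "a", "an", "and", "the", "of", "in", "to", "is" };

    private static readonly char[] Separators =
        { ' ', '\t', '\n', '.', ',', ';', ':', '!', '?', '(', ')' };

    // Tokenize, lower case, then filter out stop words.
    public static List<string> Analyze(string text)
    {
        return text
            .Split(Separators, StringSplitOptions.RemoveEmptyEntries)
            .Select(token => token.ToLowerInvariant())
            .Where(token => !StopWords.Contains(token))
            .ToList();
    }

    public static void Main()
    {
        var tokens = Analyze("The object reference is NULL in Checkout.Submit()");
        // prints: object | reference | null | checkout | submit
        Console.WriteLine(string.Join(" | ", tokens));
    }
}
```

If the same steps are applied to the search terms, a query for "Null" and a document containing "NULL" end up as the same token, which is why they match. It also shows why a field you only want to filter on by exact value is better left unanalyzed.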

Get using it!

ElasticSearch has been fantastic for us with Raygun, and we still have quite a number of features we are looking to ship which will take advantage of the flexibility and performance it offers. A large part of our domain is still persisted in a relational structure, but we are all now appreciating that data at scale doesn’t just play well in the confines of a single sandbox.

Leveraging the appropriate tool whether it be key/value, document based or relational depends on the data and what you need to do with it. If you are looking at large volumes of data and need to solve problems around search and analytics I think you will find ElasticSearch a very handy addition to your toolkit.